Program

The number of places at the workshops and roundtable sessions is limited, so reserve your spot now!

Pre-FEARS workshops

2 September

14:00-17:00

Workshop: How to develop an academic poster?

Prepare for the poster session by attending this workshop.

9 September

14:00-17:00

Workshop: How to pitch your research to a broader audience?

Prepare for the pitch session by attending this workshop.

17 September

14:00-17:00

Workshop: Pitching yourself to companies and on the job market.

Prepare for the industry roundtable session by attending this workshop.

FEARS 2025 program

12:30

Registration & Poster setup

All attendees are welcome from 12:30 to enjoy a coffee. Presenters can set up their demos and posters.

13:00

Dean speech

FEARS opens with a speech by Patrick De Baets, dean of FEA.

13:15

Keynote by prof. Andrés Vásquez Quintero, CTO & Co-founder of Azalea Vision

Professor Andrés Vásquez Quintero is the Chief Technology Officer and Co-founder of Azalea Vision, a MedTech spinoff from Ghent University and imec that is developing smart contact lenses. He also serves as an Associate Professor at UGent. Professor Vásquez Quintero was instrumental in guiding the Azalea project from its initial incubation at UGent/imec to its industrialization as a spinoff company. Under his leadership, the company has secured €15M in venture capital investment and €5.8M in public grants, and has grown the team from one to 17 people with a focus on manufacturability and clinical strategy. His technical expertise in areas like flexible electronics, thin films, and MEMS is supported by more than 20 A1 publications and 12 patents.

13:45

(Parallel sessions)

Poster and demo session (Part 1)

13:45-14:40 @ Corridor

Stroll through interesting posters and demonstrations of FEA research and take the time to get to know colleagues.

Submit your poster or demo

Detailed poster and demo schedule

Workshop 1: Your PhD in a CV

13:45-14:25 @ Lady's Mantle

Learn the do’s and don’ts of drafting a CV when you have an academic background.

Register your spot at the workshop

More info on the workshops page

Workshop 2: Funding for entrepreneurial postdocs

13:45-14:25 @ Lavender Room

An introduction to the different TechTransfer practices for postdocs and PhD researchers in the final stage of their doctorate.

Register your spot at the workshop

More info on the workshops page

14:30

(Parallel sessions)

Coffee break

14:45-15:15 @ Corridor & Chapter House

Take a break and enjoy a coffee while networking with your colleagues, industry professionals, and other researchers.

Roundtable session 1

14:30-15:10 @ Cellarium

Dive deep into hot topics about R&D after a PhD in engineering and architecture with leading companies at our Industry Roundtables.

More info on the roundtable page

15:15

(Parallel sessions)

Pitch session

15:15-15:55 @ Chapter House

Get inspired by a selection of two-minute pitches by FEA researchers.

Submit your pitch

Detailed pitch schedule

Workshop 3: Pursuing a doctoral degree

15:15-15:55 @ Lady's Mantle

Information session for students: we help you figure out whether a PhD is right for you and how to start one.

Register your spot at the workshop

More info on the workshops page

Start your own Spin-off

15:15-15:55 @ Lavender Room

In this session, we explain what a UGent spin-off is and how to start the process of founding one, with room for your own questions on the topic.

16:00

(Parallel sessions)

Poster and demo session (Part 2)

16:00-16:55 @ Corridor

Stroll through interesting posters and demonstrations of FEA research and take the time to get to know colleagues.

Submit your poster or demo

Detailed poster and demo schedule

Roundtable session 2

16:00-16:40 @ Cellarium

Dive deep into hot topics about R&D after a PhD in engineering and architecture with leading companies at our Industry Roundtables.

Register your spot at the table

More info on the roundtable page

17:00

Panel Session: Beyond the Dissertation — Building a Spin-Off

How do researchers enter a spin-off trajectory, and how do they eventually start their own business? Five recent and future spin-off founders, all current or former FEA researchers, share their experiences of different stages of this challenging but rewarding journey.

Panel members: Ahmed Selema (Drive13), Ewout Picavet (FFLOWS), Thomas Thienpont (Pyro Engineering), Ashkan Joshghani (Exoligamentz), and Alexandru-Cristian Mara (Nobl.ai)

Moderator: Simon De Corte

17:45-19:30

Award ceremony and reception

We close FEARS 2025 with drinks and bites, and present awards for remarkable contributions to the symposium.

Pitch sessions

Pitch sessions are held in the Chapter House. The pitch times are listed below.

Poster and demo sessions

Poster and demo sessions are held in the Corridor. The poster IDs and their sessions are listed below. The first poster session takes place from 13:45 to 14:40, and the second from 16:00 to 16:55.

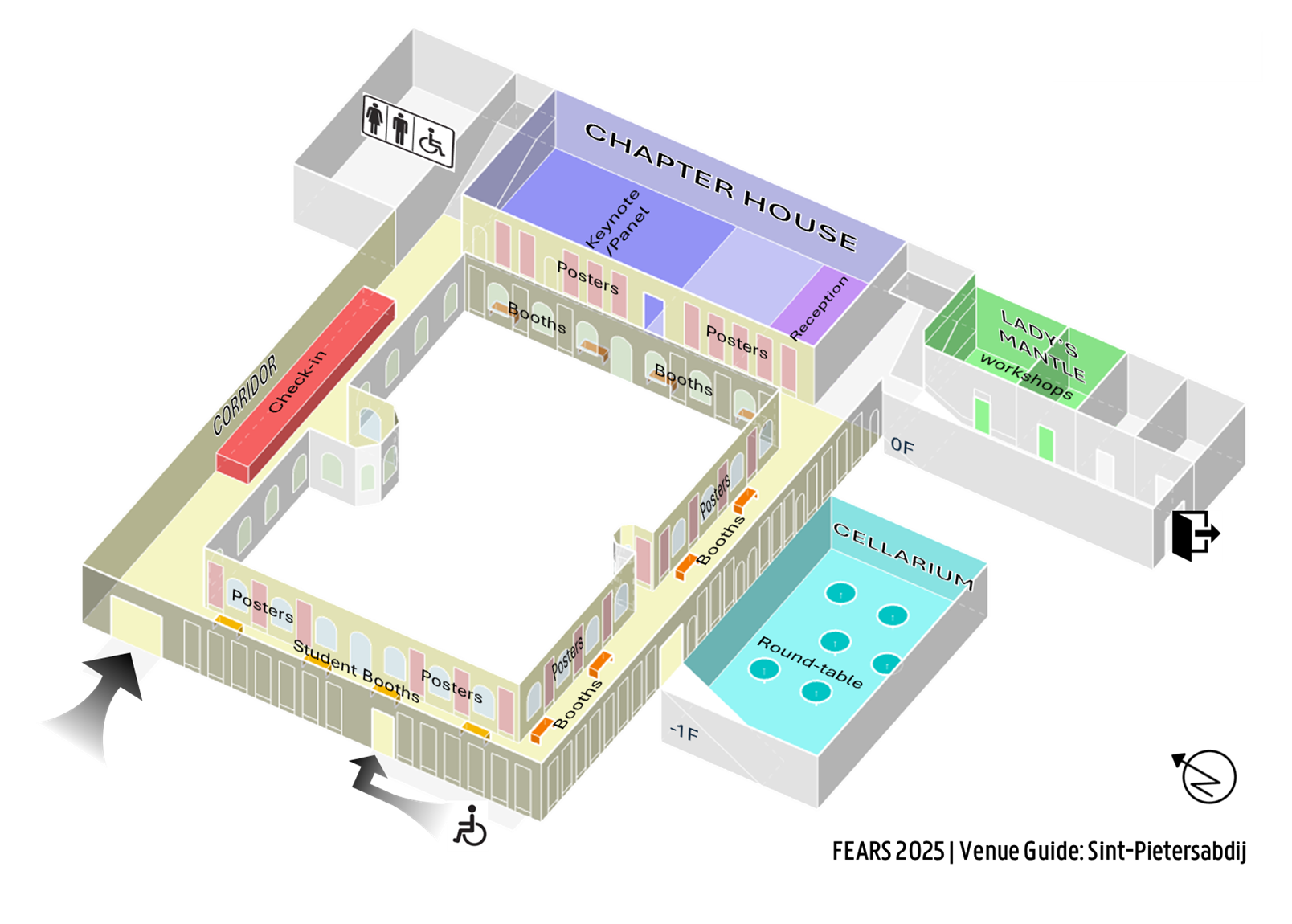

Floor plan